I intend to describe in this post how the Sun Studio 12 Compilers are being used to engineer a complex, innovative high-performance computing application in the realm of discrete geometry. The Sun Studio 12 Update 1 compilers are atleast 20% faster than the GCC compilers for this application; the speedup has been achieved by using special Sun Studio 12 features which I outline below. For the impatient, the command line used was

/usr/local/sunstudio12.1/bin/CC -xO4 -xipo=2 -xproflile=use -library=stlport4 -o

The underlying research described by this application has been carried over several years (from 2004 onwards) with my friend Anand Kulkarni (PhD candidate at UC Berkeley). Over these years the compiler, OS, microprocessor have all been changed, but the fundamental methods behind the algorithm have continued to be used.

4-dimensional polytope

Let me begin by describing in simple terms what is a

polytope. Consider the figure shown above, the intersection of half-spaces defined as linear inequalities. The dimension of the polytope is defined as the dimension of the space in which the vertices of the polytope are located.

The original motivation of our research was the

Hirsch Conjecture which bounds the diameter of the graph of the polytope of in

d dimension with

n facets to be

(n-d).

Using a cutting plane method we have shown how to enumerate

all polytopes starting with the simplex as shown below.

Combinatorial Enumeration shown in three dimension

The enumeration system generates all combinatorial distinct polytopes which are then passed to the property checker which verifies the Hirsch conjecture and other open problems. The number of d-regular graphs of n vertices are exponential in n^2, so a fast analysis algorithm implementation is crucial (even when analyzing low dimensions).

Graph diameter = max {all-pairs shortest path}

Time complexity = O(n^3), n is number of vertices

Algorithm code :

function FW(C,N) for k = 1:N for i = 1:N for j = 1:N C[i][j] = min(C[i][j], C[i][k]+C[k][j]);

endfor endfor endforThe cutting plane and facet perturbation algorithm uses complex graph theoretic data structures.

Of-course, since the application is written in standardized C++, GNU g++ also compiles the application, but as we demonstrate, using Sun Studio 12 Compilers allowed us to experiment with data-structures, perform automated performance tuning and overall presented a better environment for complex algorithmic coding, where the scientific researcher uses the programming environment to not only develop the code, but also to document and collaborate about the algorithm and methods used in the application.

- Generate all d-dimensional polytopes having less than n facets,

- Analyze the d-regular graphs arising out of these polytope for graph theoretic properties,

- Attempt to solve open conjectures like the Hirsch conjecture using combinatorial enumeration.

A reference to the algorithm is given at http://ieor.berkeley.edu/~anandk/polyenum.pdf

It should be noted that uptil now no computer algorithm for generating polytopes was known, let alone successfully implemented. Generating random polytopes of a known dimension is a far cry from generating all polytopes of a dimension and facet count (which is what we have implemented).

The key operation in the algorithm is a facet push method which operates on the face-lattice (a higher dimension generalization of the polytope graph). The algorithm was invented by myself and Anand and has been implemented in C++ by myself. The code uses STL and pthreads heavily and is written in OS and compiler agnostic manner.

Since I was an early participant on the Sun Studio Early Access and Beta Test program and was very curious how the compiler optimization would perform for a new type of algorithm I immediately used the Sun Studio 12 compilers for developing the advanced functionality of the algorithm. Contrary to other high performance applications our problem has several salient points which taken together represent a large number of commercial C++ applications. Moreover, unlike other HPC codes our implementation does not use any standardized kernel (like LAPACK, BLAS, FFT) which the compiler can use to optimize using the 80-20 rule.

The salient points of our algorithm and its C++ implementation which are very relevant to business applications,

- High performance is demanded: analyzing combinatorial properties of graphs grows doubly exponential in the number of vertices in the graph. Even analyzing a graph with 64 vertices needed careful attention to data-structures and algorithms,

- No single easily identifiable kernel exists: unlike FFT which more often than not becomes the single most compute intensive kernel, our algorithm to generate all (n,d) polytopes uses (i) graph isomorphism using vertex orbits, (ii) polytope face lattice manipulation and (iii) recursive enumeration,

- Irregular and nested parallelism opportunities: since we generate polytopes inductively starting from the simplex (in 3d a tetrahedron is a simplex), there is not a simple for(i=0;i

- Distributed hash and file level operations: we needed to save the millions of polytopes we were producing, and since we want to filter duplicated we used a distributed hash,

- Platform compatibility: primary code was developed on a dual-Opteron running Gentoo Linux (officially not supported by Sun Studio 12, but installed like a charm), and on Solaris boxes (both Opteron and SPARC),

- STL was heavily used: the polytope and the graph were represented as STL objects, with std::set, std::list, std::vector, std::map all being heavily used.

Installing Sun Studio 12 Compilers on Solaris and Linux: This was straightforward in both cases and now I have /opt/ss12/bin/CC -V

CC: Sun C++ 5.10 Linux_i386 2009/06/03

Even during the initial design and prototype of the algorithm, key facilities of the Sun IDE such as code folding, automatic file templates and especially the 80-column warning were useful. For someone who has extensively programmed on both Emacs and Visual Studio, having the ideal combination of "dmake" underlying the '+' code folding, and that too on

both Linux and Solaris with the same project was nice. Automatic CVS and SVN checkout also helped.

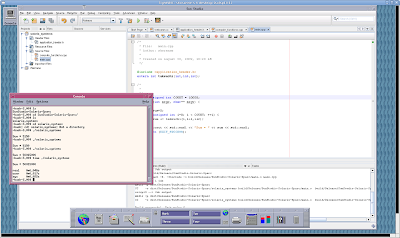

Automatic Task List generation: a feature which I have used extensively. During code development I am used to writing //TODO as a mechanism for writing notes to myself, denoting code which can later be improved or enhanced. Sun Studio 12 automatically parsed my description and generated a list of items in the

Tasks panel, as shown below:

Runtime comparison of SS 12 vs GCC 4.4.0 (SVN checkout) consistently favored SS12 especially when using profile guided optimization. Since modern processors have deep and speculative pipelines, compilers have to guess (using heuristics) which branch to favor when generating code. Something which may be obvious to the code reader (human), eg

if( NextToImpossibleCase( polytope ) ) { Discard( polytope); }

else {

// let me spend couple of million CPU cycles on you

}

without any guidance, compilers have been told to favor the 'if' clause, this can produce bad code which wastes 10000s of CPU cycles when the branch is mis-predicted. Using profile guided optimization, where we ran a special instrumented using

-xprofile=collect, then ran this on a smallish polytope generator, like (5,10). This collects the branch probabilities and execution counts for functions and code blocks. Then do a final optimization using

-xprofile=useLinker Map File: when using template heavy code, the compiler generates many similar versions of the code in ASM which then gets converted into object. Consider what happens when you have:

template

void FuncA( void ) {}template void FuncB( U& u, V& v ) {}As more and more types are used in the function, the number of combinations increases geometrically (or exponentially, much like our polytopes). Since the compiler generates object code for each type one after another, the object files not only become big (increasing linker time), but also when mapped into memory during execution cause Instruction Cache Thrashing. This is a relatively new phenomenon excaberated by type based container and overuse of function templates. A solution around this problem is to use a Linker Map file to coalesce the commonly used functions (once a type is known usually all functions will use the same type, and thus these functions should be colocated in object, then linked .exe and finally in the memory during execution. This can easily give 10% reduction. Fortunately for us Sun Studio 12 as automated linker file optimization which can be run from the menu.-xipo : cross file optimization saved us from writing code sphageti: as this feature lets you write related code in a single file, with the compiler optimizing across files to decide inling etc.on old Intel Xeon -xprefetch optimizes more than Opteron, and even less probably on Nehalem.Know thy chip: when generating code which we know will run on special clusters it makes sense to add -xchip-xautopar : is your friend, understand it, trust it, use it.If using OpenMP use -xopenmp to support this new and very exciting way to writing parllel code. In my next project, I will use this for sure.There were several small optimizations which were enabled in SS 12 and later I found out equivalent GCC command line options, but in fairness, SS 12 introduced me to these concepts. If I had to complain, it would be on the C++ std:: namespace confusion with system headers on newer Linux distributions like Fedora 10. Here even a simple program:

#include int main() { return 0; }does not compile due to w_char header having incompatible ISO C99 recognition and so on.

Performance and experimental data is available if any one is interested, and even program binary for 64-bit is available.Let me show an example of the polytope generation produced from the actual executable:

**************** POLYTOPE ENUMERATION SOFTWARE ********************

(C) Sandeep Koranne & Anand Kulkarni. 2008

Implementation based on Table lookup facet cutting algorithm.

Dimension = 4

+------------------------------------------------------------------------------+

| F_0 | F_1 | F_2 | F_3 | F_4 | F_5 | F_6 |

+------------------------------------------------------------------------------+

4 6 4 1

F[0] = ( 0 )( 1 )( 2 )( 3 )

F[1] = ( 0 1 )( 0 2 )( 0 3 )( 1 2 )( 1 3 )( 2 3 )

F[2] = ( 0 1 2 )( 0 1 3 )( 0 2 3 )( 1 2 3 )

F[3] = ( 0 1 2 3 )

--------------------------------------------------

F_VECTOR ( 4 6 4 1 )

--------------------------------------------------

Simplex hash code = 036423479936425885023

The above fragment depicts the 3-simplex with 4 vertices and 4 facets. The

f-vector shows the number of

faces of the polytope and is an extremely useful metric. Next we compute the vertex orbits and the cut-set to produce the next set of vertices:

Computing new Cut Set using orbits---> Fixed set (in terms of logical graph index ) = ---> Fixed set in polytope coordinates :Orbit [0] = 0 1 2 3 Analyzing Orbit [0] = Orbit [0] = 0 1 2 3 Checking for facet in set : 0 Candidate cut in polytope coordinates = : 0 Computing new Cut Set using orbitsApplying the cut of we get another vertex which is the truncated pyramid or the prism,

Applying cut using : 0 Dimension = 4+------------------------------------------------------------------------------+|F_0 |F_1 |F_2 |F_3 |F_4 |F_5 | F_6 |+------------------------------------------------------------------------------+ 7 9 5 1F[0] = ( 1 )( 2 )( 3 )( 4 )( 5 )( 6 )F[1] = ( 4 1 )( 5 2 )( 6 3 )( 1 2 )( 1 3 )( 2 3 )( 4 5 )( 4 6 )( 5 6 )F[2] = ( 0 1 3 6 )( 0 2 4 7 )( 1 2 5 8 )( 3 4 5 )( 6 7 8 )F[3] = ( 0 1 2 3 4 )--------------------------------------------------F_VECTOR ( 6 9 5 1 ) --------------------------------------------------And this procedure continues till we get all 6-facet polytopes in 3-dimension.

As an example let me show the graph matrix produced when generating (4,9) polytopes;

Candidate cut in polytope coordinates = : 8 Depth = 4 |L| = 33 0 | 0 0 0 0 1 0 0 0 1 0 0 0 1 0 0 0 1 1 | 0 0 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 2 | 0 1 0 1 1 0 0 0 0 1 0 0 0 0 0 0 0 3 | 0 1 1 0 1 0 0 0 0 0 0 0 0 1 0 0 0 4 | 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 5 | 0 1 0 0 0 0 1 1 1 0 0 0 0 0 0 0 0 6 | 0 0 0 0 0 1 0 1 1 0 1 0 0 0 0 0 0 7 | 0 0 0 0 0 1 1 0 1 0 0 0 0 0 1 0 0 8 | 1 0 0 0 0 1 1 1 0 0 0 0 0 0 0 0 0 9 | 0 0 1 0 0 0 0 0 0 0 1 1 1 0 0 0 0 10 | 0 0 0 0 0 0 1 0 0 1 0 1 1 0 0 0 0 11 | 0 0 0 0 0 0 0 0 0 1 1 0 1 0 0 1 0 12 | 1 0 0 0 0 0 0 0 0 1 1 1 0 0 0 0 0 13 | 0 0 0 1 0 0 0 0 0 0 0 0 0 0 1 1 1 14 | 0 0 0 0 0 0 0 1 0 0 0 0 0 1 0 1 1 15 | 0 0 0 0 0 0 0 0 0 0 0 1 0 1 1 0 1 16 | 1 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 0Accumulated 33 cuts.Finally, let me conclude this by sharing a data-set which probably is unque;

it is the set of f-vectors of (4,11) polytopes. The first column denotes the multiplicity of the f-vector.

1 F_VECTOR ( 11 22 18 7 1 ) 3 F_VECTOR ( 14 28 22 8 1 ) 3 F_VECTOR ( 15 30 23 8 1 ) 3 F_VECTOR ( 16 32 24 8 1 ) 2 F_VECTOR ( 17 34 25 8 1 ) 7 F_VECTOR ( 17 34 26 9 1 ) 22 F_VECTOR ( 18 36 27 9 1 ) 36 F_VECTOR ( 19 38 28 9 1 ) 56 F_VECTOR ( 20 40 29 9 1 ) 30 F_VECTOR ( 20 40 30 10 1 ) 1 F_VECTOR ( 21 42 29 9 1 ) 50 F_VECTOR ( 21 42 30 9 1 ) 124 F_VECTOR ( 21 42 31 10 1 ) 1 F_VECTOR ( 22 44 30 9 1 ) 29 F_VECTOR ( 22 44 31 9 1 ) 350 F_VECTOR ( 22 44 32 10 1 ) 12 F_VECTOR ( 23 46 32 9 1 ) 799 F_VECTOR ( 23 46 33 10 1 ) 131 F_VECTOR ( 23 46 34 11 1 ) 23 F_VECTOR ( 24 48 33 10 1 ) 1296 F_VECTOR ( 24 48 34 10 1 ) 859 F_VECTOR ( 24 48 35 11 1 ) 24 F_VECTOR ( 25 50 34 10 1 ) 1643 F_VECTOR ( 25 50 35 10 1 ) 3249 F_VECTOR ( 25 50 36 11 1 ) 42 F_VECTOR ( 26 52 35 10 1 ) 1588 F_VECTOR ( 26 52 36 10 1 ) 10276 F_VECTOR ( 26 52 37 11 1 ) 24 F_VECTOR ( 27 54 36 10 1 ) 1077 F_VECTOR ( 27 54 37 10 1 ) 296 F_VECTOR ( 27 54 37 11 1 ) 23382 F_VECTOR ( 27 54 38 11 1 ) 47 F_VECTOR ( 28 56 37 10 1 ) 670 F_VECTOR ( 28 56 38 10 1 ) 618 F_VECTOR ( 28 56 38 11 1 ) 42179 F_VECTOR ( 28 56 39 11 1 ) 46 F_VECTOR ( 29 58 38 10 1 ) 360 F_VECTOR ( 29 58 39 10 1 ) 1419 F_VECTOR ( 29 58 39 11 1 ) 61743 F_VECTOR ( 29 58 40 11 1 ) 24 F_VECTOR ( 30 60 39 10 1 ) 135 F_VECTOR ( 30 60 40 10 1 ) 1774 F_VECTOR ( 30 60 40 11 1 ) 68822 F_VECTOR ( 30 60 41 11 1 ) 19 F_VECTOR ( 31 62 40 10 1 ) 31 F_VECTOR ( 31 62 41 10 1 ) 3171 F_VECTOR ( 31 62 41 11 1 ) 69914 F_VECTOR ( 31 62 42 11 1 ) 15 F_VECTOR ( 32 64 41 10 1 ) 1 F_VECTOR ( 32 64 41 11 1 ) 4939 F_VECTOR ( 32 64 42 11 1 ) 62481 F_VECTOR ( 32 64 43 11 1 ) 106 F_VECTOR ( 33 66 42 11 1 ) 6317 F_VECTOR ( 33 66 43 11 1 ) 44251 F_VECTOR ( 33 66 44 11 1 ) 175 F_VECTOR ( 34 68 43 11 1 ) 6105 F_VECTOR ( 34 68 44 11 1 ) 29255 F_VECTOR ( 34 68 45 11 1 ) 154 F_VECTOR ( 35 70 44 11 1 ) 5479 F_VECTOR ( 35 70 45 11 1 ) 16545 F_VECTOR ( 35 70 46 11 1 ) 2 F_VECTOR ( 36 72 44 11 1 ) 264 F_VECTOR ( 36 72 45 11 1 ) 3957 F_VECTOR ( 36 72 46 11 1 ) 8100 F_VECTOR ( 36 72 47 11 1 ) 1 F_VECTOR ( 37 74 45 11 1 ) 238 F_VECTOR ( 37 74 46 11 1 ) 2375 F_VECTOR ( 37 74 47 11 1 ) 3020 F_VECTOR ( 37 74 48 11 1 ) 7 F_VECTOR ( 38 76 46 11 1 ) 97 F_VECTOR ( 38 76 47 11 1 ) 943 F_VECTOR ( 38 76 48 11 1 ) 936 F_VECTOR ( 38 76 49 11 1 ) 24 F_VECTOR ( 39 78 47 11 1 ) 68 F_VECTOR ( 39 78 48 11 1 ) 345 F_VECTOR ( 39 78 49 11 1 ) 59 F_VECTOR ( 39 78 50 11 1 ) 45 F_VECTOR ( 40 80 48 11 1 ) 20 F_VECTOR ( 40 80 49 11 1 ) 51 F_VECTOR ( 40 80 50 11 1 ) 1 F_VECTOR ( 5 10 10 5 1 ) 1 F_VECTOR ( 8 16 14 6 1 ) skoranne@opti ~/N2OCT8/4_11 $ Enjoy!

Only caveat you have to share all the polytopes you generate with the broad scientific community.Have fun with your install of SS12.